Netlas API (v1)

Download OpenAPI specification:

To interact with the Netlas API, you will need an API client or at least the curl command-line utility. Alternatively, you can use the Netlas CLI tool or the Netlas Python SDK.

This specification includes code samples for all of these options.

Setting Up an API Client

Most modern API clients support OpenAPI v3 imports. Click Download at the top of this page, then import the file into your preferred API client.

✅ We've confirmed that imports work smoothly in the following clients:

| API Client | Steps to Import |
|---|---|
| Postman | In Import Settings, set Folder Organization to Tags for better structure. |
| Insomnia | Collections → Create, then click the collection name in the top-left corner and choose Import From File. |
| Bruno | Click Import Collection → OpenAPI V3 File, then choose a local folder to store the workspace. |
| Scalar | Don’t forget to configure the API key in the collection's authentication settings. |

Netlas CLI Tool

The Netlas CLI tool is ideal for users who prefer the command line or Bash scripting. It allows you to interact with Netlas just like any other terminal application.

To install the CLI via Homebrew:

brew tap netlas-io/netlas

brew install netlas

Or install it using pipx:

pipx install netlas

Netlas Python SDK

The Netlas Python SDK is designed for Python developers. It provides a convenient way to work with the API, with built-in handling for common errors.

Install the SDK using pip:

pip install netlas

The Netlas CLI tool is included with the SDK, so it becomes available automatically after SDK installation. Both the Netlas Python SDK and the CLI tool are open source and available under the MIT license in the Netlas GitHub repository.

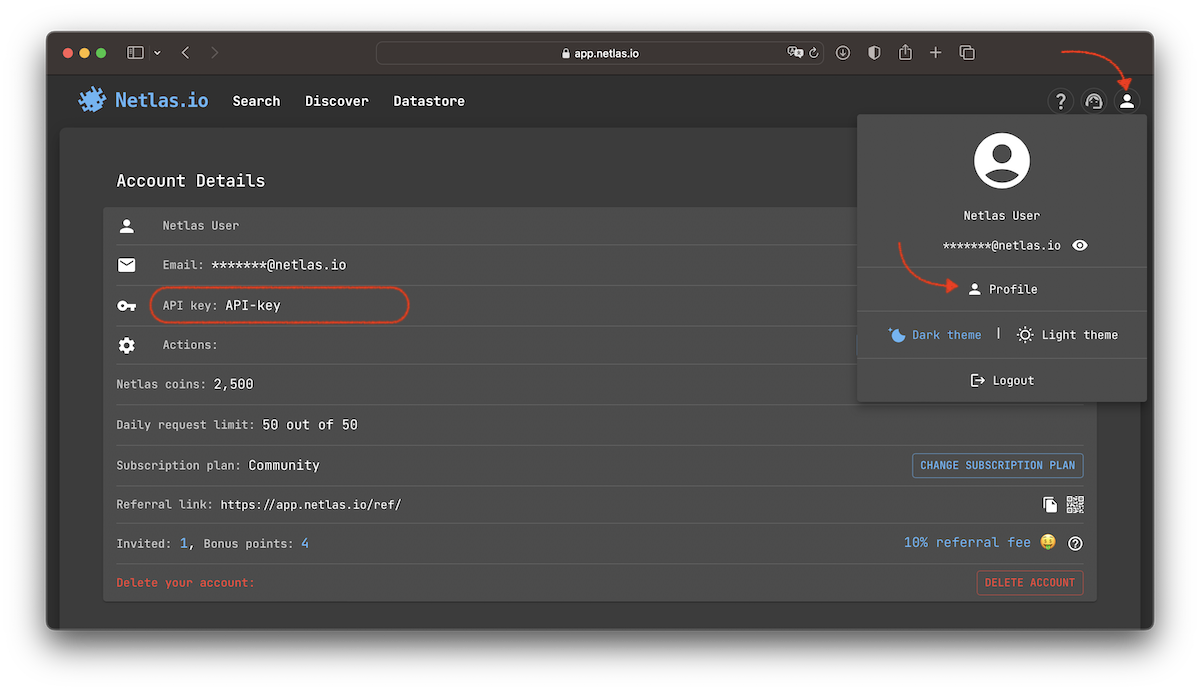

Each registered Netlas user receives a unique API key. You can find your API key on the profile page, accessible from the top-right menu in the web application.

Include your API key as a Bearer token in the Authorization header to authorize your requests. The curl examples in this manual will work as-is if you export the API key as the NETLAS_API_KEY environment variable:

export NETLAS_API_KEY=put_your_key_here

curl -X 'GET' "https://app.netlas.io/api/host/" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Netlas API authorization follows RFC 6750, The OAuth 2.0 Authorization Framework: Bearer Token Usage.
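For readers who call the API from Python without the SDK, the sketch below builds (but does not send) a request that carries the key as a Bearer token, mirroring the curl example. It uses only the standard library; the helper name `build_request` and the placeholder key are illustrative assumptions, not part of the Netlas SDK.

```python
import os
import urllib.request


def build_request(url: str, api_key: str) -> urllib.request.Request:
    """Return a GET request with the API key attached as a Bearer token."""
    if not api_key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


# Read the key from the same environment variable the curl examples use.
# The fallback value is a placeholder, not a working key.
key = os.environ.get("NETLAS_API_KEY", "put_your_key_here")
req = build_request("https://app.netlas.io/api/host/", key)
print(req.get_method(), req.full_url)
# Sending is left to the caller, e.g. urllib.request.urlopen(req)
```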

The X-API-Key header was previously used for authentication and is still supported, but it is considered deprecated and may be removed in future versions.

If you are using the Netlas CLI or SDK, securely save your API key locally:

netlas savekey "YOUR_API_KEY"

The Netlas CLI tool automatically uses the saved key.

To fetch the saved key in Python:

import netlas

api_key = netlas.helpers.get_api_key()

⚠️ FYI: You may be eligible for a special subscription if you're using Netlas for educational purposes, building an integration, or writing for a blog or journal.


To ensure fair usage and optimal performance, the Netlas API enforces the following rate limits:

- 60 requests per minute for search and count operations, excluding certificate searches.

- 3 requests per minute for search and count operations involving certificate data collections.

If these limits are exceeded, the API responds with Error 429 (Too Many Requests) and includes a Retry-After header indicating the number of seconds to wait before retrying.
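When calling the API without the SDK, the 429 and Retry-After behaviour described above could be handled roughly as follows. This is a minimal sketch: `send` is a hypothetical callable that performs one HTTP request and returns a `(status, headers, body)` tuple, and the one-second fallback delay is an assumption.

```python
import time
from typing import Callable, Mapping, Tuple

Response = Tuple[int, Mapping[str, str], bytes]  # (status, headers, body)


def retry_delay(headers: Mapping[str, str], default: float = 1.0) -> float:
    """Read the Retry-After header as a number of seconds."""
    value = headers.get("Retry-After")
    try:
        return max(0.0, float(value))
    except (TypeError, ValueError):
        # Header missing or not numeric: fall back to the assumed default.
        return default


def call_with_retry(
    send: Callable[[], Response],
    max_attempts: int = 3,
    sleep: Callable[[float], None] = time.sleep,
) -> Response:
    """Call send() and wait out 429 responses, up to max_attempts tries."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        response = send()
        status, headers, _body = response
        if status != 429 or attempt == max_attempts:
            break
        sleep(retry_delay(headers))
    return response
```

Passing `sleep` as a parameter keeps the helper easy to test without real waiting.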

The Netlas CLI and SDK are configured by default to automatically handle rate limit errors.

When using the SDK, you can customize this behavior by setting the throttling and retry parameters in the search methods.

This allows you to either disable automatic handling or specify the number of retry attempts.

To manage rate limiting manually, you can catch and handle the ThrottlingError exception in your code:

import time

import netlas

nc = netlas.Netlas()

for i in range(65):
    try:
        # throttling=False disables the built-in handling so the error reaches this code
        nc.host(host=None, fields=["*"], exclude_fields=True, throttling=False)
    except netlas.ThrottlingError as e:
        time.sleep(e.retry_after)  # Wait for the specified time before the next request

⚠️ Important: Always handle Netlas API errors correctly. Ignoring rate limits or other errors may result in temporary access restrictions. Read more about blocking policy in the FAQ section.

Retrieves a summary of Netlas data for a specific IP address or domain name.

The Host Info endpoint supports queries by IP or domain only, but returns aggregated data from all Netlas data collections in a single response.

The same data is available in the Netlas web interface via the IP/Domain Info tool.

Host Summary

Retrieve the latest available data for {host}.

path Parameters

| Parameter | Description |
|---|---|
| host (required) | IPv4 (string) or Domain (string). The IP address or domain name for which data should be retrieved. |

query Parameters

| Parameter | Description |
|---|---|
| fields | Array of strings (fields). Default: "*". Examples: fields=*, fields=ip, fields=ptr[0],whois.abuse. You can control the amount of output data by specifying which fields to include or exclude in the response. |
| source_type | string (source_type). Default: "include". Enum: "include", "exclude". |
| public_indices_only | boolean (public_indices_only). Default: false. Example: public_indices_only=true. By default, data is requested from both private and public indices, returning the most recent results. Use this query parameter to restrict the search to public indices only. |

Request samples (Curl)

# IP query example
curl -s -X GET \
  "https://app.netlas.io/api/host/23.215.0.136/" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

# Domain query example
curl -s -X GET \
  "https://app.netlas.io/api/host/example.com/" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

# Filtering output
curl -s -X GET \
  "https://app.netlas.io/api/host/google.com/" \
  -G \
  --data-urlencode 'fields=whois.registrant.organization' \
  --data-urlencode 'source_type=include' \
  -H "Authorization: Bearer $NETLAS_API_KEY"
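The same request URLs can be assembled in Python. The sketch below only builds the URL string from the path and query parameters documented for this endpoint; the helper name `host_url` is hypothetical, and joining field names with commas is an assumption based on the `fields=ptr[0],whois.abuse` example.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://app.netlas.io/api/host/"


def host_url(host="", fields=None, source_type="include",
             public_indices_only=False):
    """Build a Host Summary URL; an empty host targets the caller's own IP."""
    if source_type not in ("include", "exclude"):
        raise ValueError("source_type must be 'include' or 'exclude'")
    url = BASE_URL + (quote(host, safe="") + "/" if host else "")
    params = {}
    if fields:
        # Assumption: multiple field names are sent as one comma-separated value.
        params["fields"] = ",".join(fields)
        params["source_type"] = source_type
    if public_indices_only:
        params["public_indices_only"] = "true"
    return url + ("?" + urlencode(params) if params else "")


print(host_url("23.215.0.136"))
print(host_url("google.com", ["whois.registrant.organization"]))
```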

Response samples

Available for status codes 200, 400, 402, 429, 500, and 504. The 200 sample:

{
  "type": "ip",
  "ip": "23.215.0.136",
  "ptr": ["a23-215-0-136.deploy.static.akamaitechnologies.com"],
  "geo": {
    "continent": "North America",
    "country": "US",
    "tz": "America/Chicago",
    "location": {
      "lat": 37.751,
      "lon": -97.822
    },
    "accuracy": 1000
  },
  "privacy": {
    "vpn": false,
    "proxy": false,
    "tor": false
  },
  "organization": "Example Technologies, Inc.",
  "domains": ["example.com", "example.net"],
  "domains_count": 2,
  "whois": {
    "@timestamp": "2025-01-07T05:17:39",
    "raw": "Raw WHOIS data in the form of a string will be here.\n\nThe rest of the document contains the same data in parsed form.",
    "ip": {
      "gte": "23.214.224.0",
      "lte": "23.215.8.255"
    },
    "related_nets": [],
    "net": {
      "country": "US",
      "address": "145 Broadway",
      "city": "Cambridge",
      "created": "2013-07-12",
      "range": "23.192.0.0 - 23.223.255.255",
      "description": "Akamai Technologies, Inc.",
      "handle": "NET-23-192-0-0-1",
      "organization": "Akamai Technologies, Inc. (AKAMAI)",
      "name": "AKAMAI",
      "start_ip": "23.192.0.0",
      "cidr": ["23.192.0.0/11"],
      "net_size": 2097151,
      "state": "MA",
      "postal_code": "02142",
      "updated": "2013-08-09",
      "end_ip": "23.223.255.255",
      "contacts": {
        "phones": ["+1-617-274-7134", "+1-617-444-2535", "+1-617-444-0017"]
      }
    },
    "asn": {
      "number": ["16625"],
      "registry": "arin",
      "country": "US",
      "name": "AKAMAI-AS",
      "cidr": "23.214.224.0/19",
      "updated": "2013-07-12"
    }
  },
  "ports": [
    {
      "protocol": "http",
      "prot4": "tcp",
      "port": 80,
      "prot7": "http"
    },
    {
      "protocol": "https",
      "prot4": "tcp",
      "port": 443,
      "prot7": "http"
    }
  ],
  "software": [
    {
      "tag": [
        {
          "azure_cdn": {
            "version": ""
          },
          "name": "azure_cdn",
          "description": "Azure Content Delivery Network (CDN) reduces load times, save bandwidth and speed responsiveness.",
          "fullname": "Azure CDN",
          "category": ["CDN"]
        }
      ]
    }
  ],
  "ioc": [
    {
      "lseen": "2024-01-19T00:00Z",
      "score": {
        "total": 63,
        "src": 71.41,
        "tags": 0.89,
        "frequency": 1
      },
      "fseen": "2024-01-19T00:00Z",
      "@timestamp": "2024-08-19T00:00Z",
      "domain": "example.com",
      "fp": {
        "descr": "",
        "alarm": "false"
      },
      "threat": ["duke_group"],
      "tags": ["malware"]
    }
  ],
  "source": [
    {
      "id": 4701,
      "name": "perimeter-1741688290-9rc7tkern-demo-1-responses",
      "availability": "private",
      "label": "23.215.0.136, example.com"
    },
    {
      "id": 4567,
      "name": "whois-domains-2025-01-27",
      "availability": "default"
    },
    {
      "id": 4621,
      "name": "domains.dns-2025-03-06",
      "availability": "default"
    }
  ]
}

Caller’s Host Summary

Retrieves the latest available data for the IP address of the client making the request.

Use this method to obtain information about your own IP address.

query Parameters

| Parameter | Description |
|---|---|
| fields | Array of strings (fields). Default: "*". Examples: fields=*, fields=ip, fields=ptr[0],whois.abuse. You can control the amount of output data by specifying which fields to include or exclude in the response. |
| source_type | string (source_type). Default: "include". Enum: "include", "exclude". |
| public_indices_only | boolean (public_indices_only). Default: false. Example: public_indices_only=true. By default, data is requested from both private and public indices, returning the most recent results. Use this query parameter to restrict the search to public indices only. |

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/host/" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

Available for status codes 200, 400, 402, 429, 500, and 504.

The 200 sample is identical to the Host Summary 200 sample above.

Access continuously updated internet scan data collected by Netlas.

The same data is available in the Netlas web interface via the Responses Search tool.

Responses Search

Searches for q in the selected indices and returns up to 20 search results, starting from the start+1 document.

❗️ This method allows retrieving only the first 10,000 search results.

Use it when the expected number of results is relatively low or to craft and refine a query. Use the Download endpoint to fetch results without pagination or quantity limitations.
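The paging limits above (20 results per request, only the first 10,000 results reachable) translate into a fixed set of valid `start` offsets. The following sketch enumerates them; the function names are hypothetical and the constants are taken from the description of this endpoint.

```python
PAGE_SIZE = 20        # results returned per request
MAX_RESULTS = 10_000  # only the first 10,000 results are reachable


def page_offsets(total):
    """Yield the start offsets needed to cover min(total, MAX_RESULTS) results."""
    reachable = min(max(total, 0), MAX_RESULTS)
    yield from range(0, reachable, PAGE_SIZE)


def needs_download(total):
    """True when paging cannot reach every result and Download is the better fit."""
    return total > MAX_RESULTS


print(list(page_offsets(45)))
print(needs_download(25_000))
```

For a query with 45 matches this yields three requests (offsets 0, 20, and 40); for very large result sets the last usable offset is 9980.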

query Parameters

| Parameter | Description |
|---|---|
| q (required) | string (q). The query string used for searching. See Query Syntax for more details. |
| start | integer (start), [ 0 .. 9980 ]. Default: 0. Example: start=20. Use for pagination. Offset from the first search result to return. |
| indices | Array of strings (indices). Default: "". A list of indices where the search will be performed. ℹ️ Unlike other search tools, Responses search does not have a default index. |
| fields | Array of strings (fields). Default: "*". Examples: fields=*, fields=ip, fields=ptr[0],whois.abuse. You can control the amount of output data by specifying which fields to include or exclude in the response. |
| source_type | string (source_type). Default: "include". Enum: "include", "exclude". |

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/responses/" \
  -G \
  --data-urlencode "q=host:example.com" \
  --data-urlencode "fields=ip,uri" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

Available for status codes 200, 400, 402, 403, 429, 500, and 504. The 200 sample:

{
  "items": [
    {
      "highlight": {
        "host": "example.com"
      },
      "data": {
        "last_updated": "2025-02-13T14:33:05.761Z",
        "jarm": "28d28d28d00028d00042d42d0000005af340c9af4dda1ac7f5ed68d47c4416",
        "isp": "Akamai Technologies",
        "ip": "23.192.228.84",
        "certificate": {
          "issuer_dn": "C=US, O=DigiCert Inc, CN=DigiCert Global G3 TLS ECC SHA384 2020 CA1",
          "fingerprint_md5": "c33979ff8bc19a94820d6804b3681881",
          "chain": [
            {
              "issuer_dn": "C=US, O=DigiCert Inc, OU=www.digicert.com, CN=DigiCert Global Root G3",
              "fingerprint_md5": "bf44da68b5abcb0a48c8471806ff9706",
              "signature": {
                "valid": false,
                "signature_algorithm": {
                  "name": "ECDSA-SHA384",
                  "oid": "1.2.840.10045.4.3.3"
                },
                "value": "MGUCMH4mWG7uiOwM3RVB7nq4mZlw0WJlT6AgnkexW8GyZzEdzHJ6ryJyQEJuZYT+h0sPGQIxAOa/1q40h1s/Z8cdqG/VEni15ocxRKldxrh4zM/v1DJYEf86hQY8HYRv0/X52jMcpA==",
                "self_signed": false
              },
              "redacted": false,
              "subject": {
                "country": ["US"],
                "organization": ["DigiCert Inc"],
                "common_name": ["DigiCert Global G3 TLS ECC SHA384 2020 CA1"]
              },
              "serial_number": "14626237344301700912191253757342652550",
              "version": 3,
              "issuer": {
                "country": ["US"],
                "organization": ["DigiCert Inc"],
                "common_name": ["DigiCert Global Root G3"],
                "organizational_unit": ["www.digicert.com"]
              },
              "fingerprint_sha256": "0587d6bd2819587ab90fb596480a5793bd9f7506a3eace73f5eab366017fe259",
              "tbs_noct_fingerprint": "b01920744bbb76c9ab053e01e07b7e050e473d20f79f7bea435fafe43c9d242f",
              "tbs_fingerprint": "b01920744bbb76c9ab053e01e07b7e050e473d20f79f7bea435fafe43c9d242f",
              "extensions": {
                "subject_key_id": "8a23eb9e6bd7f9375df96d2139769aa167de10a8",
                "certificate_policies": [
                  {"id": "2.16.840.1.114412.2.1"},
                  {"id": "2.23.140.1.1"},
                  {"id": "2.23.140.1.2.1"},
                  {"id": "2.23.140.1.2.2"},
                  {"id": "2.23.140.1.2.3"}
                ],
                "authority_key_id": "b3db48a4f9a1c5d8ae3641cc1163696229bc4bc6",
                "key_usage": {
                  "digital_signature": true,
                  "certificate_sign": true,
                  "crl_sign": true,
                  "value": 97
                },
                "authority_info_access": {},
                "basic_constraints": {
                  "max_path_len": 0,
                  "is_ca": true
                },
                "extended_key_usage": {
                  "client_auth": true,
                  "server_auth": true
                }
              },
              "subject_dn": "C=US, O=DigiCert Inc, CN=DigiCert Global G3 TLS ECC SHA384 2020 CA1",
              "fingerprint_sha1": "9577f91fe86c27d9912129730e8166373fc2eeb8",
              "signature_algorithm": {
                "name": "ECDSA-SHA384",
                "oid": "1.2.840.10045.4.3.3"
              },
              "spki_subject_fingerprint": "a7cb399df47e982018276c3397790bdef648e6d87b2f7b90d551f719ac6098c4",
              "validity": {
                "length": 315532799,
                "start": "2021-04-14T00:00:00Z",
                "end": "2031-04-13T23:59:59Z"
              },
              "validation_level": "EV"
            }
          ],
          "signature": {
            "valid": false,
            "signature_algorithm": {
              "name": "ECDSA-SHA384",
              "oid": "1.2.840.10045.4.3.3"
            },
            "value": "MGUCMQD5poJGU9tv5Vj67hq8/Jobt+9QMmo3wrCWtcPhem1PtAv4PTf4ED8VQSjd0PWLPfsCMGRjeOGy4sBbulawNu1f9DDGnqQ2wriOHX9GO9X/brSzFDAz8Yzu3T5PS4/Yv5jXZQ==",
            "self_signed": false
          },
          "redacted": false,
          "subject": {
            "country": ["US"],
            "province": ["California"],
            "organization": ["Internet Corporation for Assigned Names and Numbers"],
            "locality": ["Los Angeles"],
            "common_name": ["*.example.com"]
          },
          "serial_number": "14416812407440461216471976375640436634",
          "version": 3,
          "issuer": {
            "country": ["US"],
            "organization": ["DigiCert Inc"],
            "common_name": ["DigiCert Global G3 TLS ECC SHA384 2020 CA1"]
          },
          "fingerprint_sha256": "455943cf819425761d1f950263ebf54755d8d684c25535943976f488bc79d23b",
          "tbs_noct_fingerprint": "bc8ace8a15de0d8136c6f642e1d1e367a3dd38d5534e3fc4f52c5ee6b86cb25d",
          "tbs_fingerprint": "ad7d5aa4244532a22369dfa25a851e180cb34a39d90efba98022f0f9832e0bd9",
          "extensions": {
            "subject_key_id": "f0c16a320decdac7ea8fcd0d6d191259d1be72ed",
            "crl_distribution_points": [],
            "authority_key_id": "8a23eb9e6bd7f9375df96d2139769aa167de10a8",
            "key_usage": {
              "key_agreement": true,
              "digital_signature": true,
              "value": 17
            },
            "subject_alt_name": {
              "dns_names": ["*.example.com", "example.com"]
            },
            "signed_certificate_timestamps": [
              {
                "log_id": "DleUvPOuqT4zGyyZB7P3kN+bwj1xMiXdIaklrGHFTiE=",
                "signature": "BAMARTBDAh8kFw9aTHzSKTu4thbo4a81i8ng2Y5HZFdz26+IU8fpAiBS265R6cchPlQ1Yl98EFGrfW1QaLtkNNKuszR/jPVVrg==",
                "version": 0,
                "timestamp": 1736902885
              },
              {
                "log_id": "ZBHEbKQS7KeJHKICLgC8q08oB9QeNSer6v7VA8l9zfA=",
                "signature": "BAMARjBEAiBwrujYB4VdUL4n/xuwR6u3IjBh/I3XIf8cuC862JXrFwIgcjBTLw4RoOLGJtTLKwxlXnXMKROHjdEbmXBRplscCXI=",
                "version": 0,
                "timestamp": 1736902885
              },
              {
                "log_id": "SZybad4dfOz8Nt7Nh2SmuFuvCoeAGdFVUvvp6ynd+MM=",
                "signature": "BAMARzBFAiBoWHrvIRDaXCCbdfXqfaJaMRAUgjZvZ+k420FWJtlVbAIhAPmmyqNcNiwgRvWHKHRLxsE3c7i7awD3OKwoiViNmDzC",
                "version": 0,
                "timestamp": 1736902885
              }
            ],
            "authority_info_access": {},
            "basic_constraints": {
              "is_ca": false
            },
            "extended_key_usage": {
              "client_auth": true,
              "server_auth": true
            }
          },
          "subject_dn": "C=US, ST=California, L=Los Angeles, O=Internet Corporation for Assigned Names and Numbers, CN=*.example.com",
          "names": ["*.example.com", "example.com"],
          "fingerprint_sha1": "310db7af4b2bc9040c8344701aca08d0c69381e3",
          "signature_algorithm": {
            "name": "ECDSA-SHA384",
            "oid": "1.2.840.10045.4.3.3"
          },
          "spki_subject_fingerprint": "9d77c9a308deb7b5d91be7d8d5e10587bd9381a70913cfad1883b9bdcd825d43",
          "validity": {
            "length": 31622399,
            "start": "2025-01-15T00:00:00Z",
            "end": "2026-01-15T23:59:59Z"
          },
          "validation_level": "OV"
        },
        "host_type": "domain",
        "target": {
          "ip": "3.233.61.204",
          "type": "ip"
        },
        "prot7": "http",
        "ptr": ["a23-192-228-84.deploy.static.akamaitechnologies.com"],
        "geo": {
          "continent": "North America",
          "country": "US",
          "city": "Santa Clara",
          "tz": "America/Los_Angeles",
          "location": {
            "accuracy": 20,
            "long": -121.9543,
            "lat": 37.353
          },
          "postal": "95052",
          "subdivisions": ["CA"]
        },
        "path": "/",
        "protocol": "https",
        "prot4": "tcp",
        "@timestamp": "2025-02-13T14:33:05.761Z",
        "whois": {
          "related_nets": [],
          "net": {
            "country": "US",
            "address": "145 Broadway",
            "city": "Cambridge",
            "created": "2013-07-12",
            "range": "23.192.0.0 - 23.223.255.255",
            "description": "Akamai Technologies, Inc.",
            "handle": "NET-23-192-0-0-1",
            "organization": "Akamai Technologies, Inc. (AKAMAI)",
            "start_ip": "23.192.0.0",
            "name": "AKAMAI",
            "net_size": 2097151,
            "cidr": ["23.192.0.0/11"],
            "state": "MA",
            "postal_code": "02142",
            "updated": "2013-08-09",
            "end_ip": "23.223.255.255",
            "contacts": {
              "phones": ["+1-617-444-0017", "+1-617-444-2535", "+1-617-274-7134"]
            }
          },
          "asn": {
            "registry": "arin",
            "country": "US",
            "number": ["20940"],
            "name": "AKAMAI-ASN1",
            "cidr": "23.192.164.0/24",
            "updated": "2013-07-12"
          }
        },
        "port": 443,
        "domain": ["postleitzahlsuche.de", "staging-one.maz-online.de"],
        "host": "example.com",
        "iteration": "38",
        "http": {
          "headers": {
            "date": ["Thu, 13 Feb 2025 13:56:57 GMT"],
            "accept_ranges": ["bytes"],
            "content_type": ["text/html"],
            "vary": ["Accept-Encoding"],
            "cache_control": ["max-age=503"],
            "connection": ["keep-alive"],
            "etag": ["\"84238dfc8092e5d9c0dac8ef93371a07:1736799080.121134\""],
            "last_modified": ["Mon, 13 Jan 2025 20:11:20 GMT"],
            "alt_svc": ["h3=\":443\"; ma=93600,h3-29=\":443\"; ma=93600,h3-Q050=\":443\"; ma=93600,quic=\":443\"; ma=93600; v=\"46,43\""]
          },
          "status_code": 200,
          "body_sha256": "ea8fac7c65fb589b0d53560f5251f74f9e9b243478dcb6b3ea79b5e36449c8d9",
          "meta": [
            "charset=\"utf-8\" ",
            "http-equiv=\"Content-type\" content=\"text/html; charset=utf-8\" ",
            "name=\"viewport\" content=\"width=device-width, initial-scale=1\" "
          ],
          "http_version": {
            "major": 1,
            "minor": 1,
            "name": "HTTP/1.1"
          },
          "body": "<!doctype html>\n<html>\n<head>\n <title>Example Domain</title>\n\n <meta charset=\"utf-8\" />\n <meta http-equiv=\"Content-type\" content=\"text/html; charset=utf-8\" />\n <meta name=\"viewport\" content=\"width=device-width, initial-scale=1\" />\n <style type=\"text/css\">\n body {\n background-color: #f0f0f2;\n margin: 0;\n padding: 0;\n font-family: -apple-system, system-ui, BlinkMacSystemFont, \"Segoe UI\", \"Open Sans\", \"Helvetica Neue\", Helvetica, Arial, sans-serif;\n \n }\n div {\n width: 600px;\n margin: 5em auto;\n padding: 2em;\n background-color: #fdfdff;\n border-radius: 0.5em;\n box-shadow: 2px 3px 7px 2px rgba(0,0,0,0.02);\n }\n a:link, a:visited {\n color: #38488f;\n text-decoration: none;\n }\n @media (max-width: 700px) {\n div {\n margin: 0 auto;\n width: auto;\n }\n }\n </style> \n</head>\n\n<body>\n<div>\n <h1>Example Domain</h1>\n <p>This domain is for use in illustrative examples in documents. You may use this\n domain in literature without prior coordination or asking for permission.</p>\n <p><a href=\"https://www.iana.org/domains/example\">More information...</a></p>\n</div>\n</body>\n</html>\n",
          "status_line": "200 OK",
          "title": "Example Domain",
          "content_length": -1
        },
        "scan_date": "2025-02-13"
      }
    }
  ]
}

Responses Count

Counts the number of documents matching the search query q in the selected indices.

- If there are fewer than 1,000 results, the method returns the exact count.

- If there are more than 1,000 results, the count is estimated with an error margin not exceeding 3%.
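One way to reason about the returned number is to turn it into a range. The sketch below assumes the 3% margin is applied to the reported value and treats a count of exactly 1,000 as an estimate; neither detail is stated in this reference, and the helper name `count_range` is hypothetical.

```python
def count_range(reported, margin_percent=3):
    """Return (low, high) bounds for the true number of matching documents."""
    if reported < 1000:
        # Small result sets are reported exactly.
        return reported, reported
    # Assumption: the margin is relative to the reported value.
    # Integer ceiling division avoids floating-point rounding surprises.
    delta = (reported * margin_percent + 99) // 100
    return reported - delta, reported + delta


print(count_range(123))
print(count_range(2000))
```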

query Parameters

| Parameter | Description |
|---|---|
| q (required) | string (q). Example: q=ip:"23.192.0.0/11". The query string used for searching. See Query Syntax for more details. |
| indices | Array of strings (indices). Default: "". A list of indices where the search will be performed. ℹ️ Unlike other search tools, Responses search does not have a default index. |

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/responses_count/" \
  -G \
  --data-urlencode 'q=ip:"23.192.0.0/11"' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

Available for status codes 200, 400, 403, 429, 500, and 504. The 200 sample:

{
  "count": 123
}

Responses Download

Retrieves size search results matching the query q in the selected indices.

The Netlas SDK and CLI tool additionally include a download_all() method and an --all option that allow you to retrieve all available results.

Request Body schema: application/json (required)

| Parameter | Description |
|---|---|
| q (required) | string (q). The query string used for searching. See Query Syntax for more details. |
| size (required) | integer. Number of documents to download. Call the corresponding Count endpoint to get the number of available documents. |
| indices | Array of strings (indices). A list of index labels to search in. If not provided, the search will be performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |
| fields (required) | Array of strings (fields). You can control the amount of output data by specifying which fields to include or exclude in the response. |
| source_type (required) | string (source_type). Enum: "include", "exclude". |
| type | string. Default: "json". Enum: "json", "csv". Content type to download. |
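A request body that follows the schema above could be assembled and checked before sending, as in this sketch. The helper name `download_payload` and its validation rules are assumptions layered on the documented fields; it only produces the JSON text and does not call the API.

```python
import json


def download_payload(q, size, fields, source_type="include",
                     indices=None, fmt="json"):
    """Assemble the JSON body for a Download request from the documented fields."""
    if not q:
        raise ValueError("q is required")
    if size < 1:
        raise ValueError("size must be a positive integer")
    if not fields:
        raise ValueError("fields is required")
    if source_type not in ("include", "exclude"):
        raise ValueError("source_type must be 'include' or 'exclude'")
    if fmt not in ("json", "csv"):
        raise ValueError("type must be 'json' or 'csv'")
    body = {
        "q": q,
        "size": size,
        "fields": list(fields),
        "source_type": source_type,
    }
    if indices:
        body["indices"] = list(indices)
    if fmt != "json":
        # "json" is the documented default, so it is only sent when changed.
        body["type"] = fmt
    return json.dumps(body)


print(download_payload('ip:"23.192.0.0/11"', 100, ["uri", "port"]))
```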

Request samples (Payload)

{
  "q": "ip:\"23.192.0.0/11\"",
  "size": 100,
  "fields": ["uri", "host_type", "port", "prot4", "prot7", "protocol"],
  "source_type": "include",
  "indices": ["2025-09-24"]
}

Response samples

Available for status codes 200, 400, 401, 402, 403, 429, 500, and 504. The 200 sample:

[
  {
    "data": {
      "path": "/ClientPortal/Login.aspx",
      "protocol": "https",
      "prot4": "tcp",
      "port": 443,
      "ip": "23.218.66.148",
      "host": "ww4.autotask.net",
      "host_type": "domain",
      "prot7": "http",
      "ptr": ["a23-218-66-148.deploy.static.akamaitechnologies.com"]
    }
  },
  {
    "data": {
      "path": "/taxfreedom/",
      "protocol": "http",
      "prot4": "tcp",
      "port": 80,
      "ip": "23.218.68.10",
      "host": "turbotax.intuit.com",
      "host_type": "domain",
      "prot7": "http",
      "ptr": ["a23-218-68-10.deploy.static.akamaitechnologies.com"]
    }
  },
  {
    "data": {
      "path": "/taxfreedom/",
      "protocol": "https",
      "prot4": "tcp",
      "port": 443,
      "ip": "23.218.68.10",
      "host": "turbotax.intuit.com",
      "host_type": "domain",
      "prot7": "http",
      "ptr": ["a23-218-68-10.deploy.static.akamaitechnologies.com"]
    }
  },
  {
    "data": {
      "path": "/saml/login/8141776/87e9",
      "protocol": "https",
      "prot4": "tcp",
      "port": 443,
      "ip": "23.200.189.212",
      "host": "lastpass.com",
      "host_type": "domain",
      "prot7": "http",
      "ptr": ["a23-200-189-212.deploy.static.akamaitechnologies.com"]
    }
  },
  {
    "data": {
      "path": "/61b1ba49b79d/intresseanmlan-mi-ntverket-2018-ett-arbetsseminarium-workshop-med-dr-stephen/",
      "protocol": "https",
      "prot4": "tcp",
      "port": 443,
      "ip": "23.209.18.242",
      "host": "mailchi.mp",
      "host_type": "domain",
      "prot7": "http",
      "ptr": ["a23-209-18-242.deploy.static.akamaitechnologies.com"]
    }
  }
]

Responses Facet Search

Searches for q in the selected indices and groups results by the facets field.

query Parameters

| Parameter | Description |
|---|---|
| q (required) | string (q). Example: q=ip:"23.192.0.0/11". The query string used for searching. See Query Syntax for more details. |
| facets (required) | Array of strings (facets). Example: facets=protocol. A list of fields to group search results by. |
| indices | Array of strings (indices). Default: "". A list of indices where the search will be performed. ℹ️ Unlike other search tools, Responses search does not have a default index. |
| size | integer (facet_search_size), [ 1 .. 1000 ]. Default: 100. Number of search results to return for each facet. |
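A facet response groups documents under an `aggregations` list, where each entry has a `key` list and a `doc_count`, as in the 200 response sample for this endpoint. The sketch below turns such a response into a lookup table and prints each group's share; the helper name `facet_counts` is hypothetical and the inline sample is a shortened copy of that response.

```python
import json


def facet_counts(response_text):
    """Map each facet key (as a tuple) to its doc_count."""
    payload = json.loads(response_text)
    return {tuple(a["key"]): a["doc_count"] for a in payload["aggregations"]}


# Shortened copy of the documented 200 sample.
sample = (
    '{"aggregations": ['
    '{"key": ["http"], "doc_count": 1675766}, '
    '{"key": ["https"], "doc_count": 1161498}, '
    '{"key": ["ssh"], "doc_count": 973}]}'
)

counts = facet_counts(sample)
total = sum(counts.values())
for key, n in sorted(counts.items(), key=lambda kv: -kv[1]):
    # Keys are joined so that multi-field facets print as one label.
    print("/".join(map(str, key)), n, f"{n / total:.1%}")
```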

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/responses_facet/" \
  -G \
  --data-urlencode 'q=ip:"23.192.0.0/11"' \
  --data-urlencode 'facets=protocol' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

Available for status codes 200, 400, 402, 403, 429, 500, and 504. The 200 sample:

{
  "aggregations": [
    {
      "key": ["http"],
      "doc_count": 1675766
    },
    {
      "key": ["https"],
      "doc_count": 1161498
    },
    {
      "key": ["dns_tcp"],
      "doc_count": 15834
    },
    {
      "key": ["dns"],
      "doc_count": 14602
    },
    {
      "key": ["ssh"],
      "doc_count": 973
    }
  ]
}

Information about domain names, their corresponding IP addresses, and other types of DNS records.

The same data is available in the Netlas web interface via the DNS Search tool.

Domains Search

Searches for q in the selected indices and returns up to 20 search results, starting from the start+1 document.

❗️ This method allows retrieving only the first 10,000 search results.

Use it when the expected number of results is relatively low or to craft and refine a query. Use the Download endpoint to fetch results without pagination or quantity limitations.

query Parameters

| Parameter | Description |
|---|---|
| q (required) | string (q). The query string used for searching. See Query Syntax for more details. |
| start | integer (start), [ 0 .. 9980 ]. Default: 0. Example: start=20. Use for pagination. Offset from the first search result to return. |
| indices | Array of strings (indices). Default: "". Examples: indices=2025-09-24,2025-09-02, indices= (empty). A list of index labels to search in. If not provided, the search will be performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |
| fields | Array of strings (fields). Default: "*". Examples: fields=*, fields=domain,a. You can control the amount of output data by specifying which fields to include or exclude in the response. |
| source_type | string (source_type). Default: "include". Enum: "include", "exclude". |

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/domains/" \
  -G \
  --data-urlencode "q=domain:example.com" \
  --data-urlencode "fields=a,aaaa,ns,mx,txt" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

Available for status codes 200, 400, 402, 403, 429, 500, and 504. The 200 sample:

{
  "items": [
    {
      "data": {
        "txt": [
          "_k2n1y4vw3qtb4skdx9e7dxt97qrmmq9",
          "v=spf1 -all"
        ],
        "a": [
          "23.192.228.80",
          "23.192.228.84",
          "23.215.0.136",
          "96.7.128.175",
          "23.215.0.138",
          "96.7.128.198"
        ],
        "last_updated": "2025-02-18T20:14:08.642Z",
        "@timestamp": "2025-02-18T20:14:08.642Z",
        "level": 2,
        "zone": "com",
        "ns": [
          "a.iana-servers.net",
          "b.iana-servers.net"
        ],
        "domain": "example.com",
        "aaaa": [
          "2600:1408:ec00:36::1736:7f24",
          "2600:1406:bc00:53::b81e:94ce",
          "2600:1406:3a00:21::173e:2e66",
          "2600:1406:bc00:53::b81e:94c8",
          "2600:1408:ec00:36::1736:7f31",
          "2600:1406:3a00:21::173e:2e65"
        ]
      }
    }
  ]
}

Domains Count

Counts the number of documents matching the search query q in the selected indices.

- If there are fewer than 1,000 results, the method returns the exact count.

- If there are more than 1,000 results, the count is estimated with an error margin not exceeding 3%.

query Parameters

| Parameter | Description |
|---|---|
| q (required) | string (q). Example: q=domain:*.example.com a:*. The query string used for searching. See Query Syntax for more details. |
| indices | Array of strings (indices). Default: "". Examples: indices=2025-09-24,2025-09-02, indices= (empty). A list of index labels to search in. If not provided, the search will be performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

Request samples (Curl)

curl -s -X GET \
  "https://app.netlas.io/api/domains_count/" \
  -G \
  --data-urlencode "q=domain:*.example.com a:*" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 403

- 429

- 500

- 504

{
  "count": 123
}

Domains Download

Retrieves size search results matching the query q in the selected indices.

The Netlas SDK and CLI tool additionally provide a download_all() method and an --all option, respectively, which let you retrieve all available results.

Request Body schema: application/json (required)

| q required | string (q) The query string used for searching. See Query Syntax for more details. |

| size required | integer Number of documents to download. Call the corresponding Count endpoint to get the number of available documents. |

| indices | Array of strings (indices) A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields required | Array of strings (fields) You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type required | string (source_type) Enum: "include" "exclude" Specify

|

| type | string Default: "json" Enum: "json" "csv" Content type to download. |
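The request body described in the table above can be assembled and checked before it is sent. The following is a sketch under stated assumptions: `build_download_payload` is a hypothetical helper, the rule that `size` must be a positive integer is an assumption (the table only says "integer"), and the endpoint URL is deliberately left out because it is not shown in this section.

```python
def build_download_payload(q, size, fields, source_type="include",
                           indices=None, type_="json"):
    """Assemble a download request body and validate the enum values."""
    if source_type not in ("include", "exclude"):
        raise ValueError("source_type must be 'include' or 'exclude'")
    if type_ not in ("json", "csv"):
        raise ValueError("type must be 'json' or 'csv'")
    if not isinstance(size, int) or size < 1:
        # Assumption: a download of zero or fewer documents is meaningless.
        raise ValueError("size must be a positive integer")
    payload = {
        "q": q,
        "size": size,
        "fields": list(fields),
        "source_type": source_type,
        "type": type_,
    }
    if indices:
        # Optional: omit the key to search the default index.
        payload["indices"] = list(indices)
    return payload


print(build_download_payload("domain:*.example.com a:*", 100, ["a", "domain"]))
```

Validating the enum values on the client side gives a clearer error than waiting for a 400 response.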

Responses

Request samples

- Payload

- Curl

- Netlas CLI

- Python

{
  "q": "domain:*.example.com a:*",
  "size": 100,
  "fields": [
    "a",
    "domain"
  ],
  "source_type": "include"
}

Response samples

- 200

- 400

- 401

- 402

- 403

- 429

- 500

- 504

[
  {
    "data": {
      "a": [
        "83.235.76.17"
      ],
      "domain": "idn3241.example.com"
    }
  },
  {
    "data": {
      "a": [
        "143.244.220.150"
      ],
      "domain": "skadowskyu.example.com"
    }
  },
  {
    "data": {
      "a": [
        "212.109.215.174",
        "212.109.215.175"
      ],
      "domain": "server9.example.com"
    }
  },
  {
    "data": {
      "a": [
        "83.235.76.17"
      ],
      "domain": "del1.example.com"
    }
  },
  {
    "data": {
      "a": [
        "212.109.215.174",
        "212.109.215.175"
      ],
      "domain": "n.parfionov2017.example.com"
    }
  }
]

Domains Facet Search

Searches for q in the selected indices and groups results by the facets field.
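A facet response carries an `aggregations` array in which every bucket has a `key` list and a `doc_count`, as in the response sample for this endpoint. The sketch below shows one way to turn that array into a lookup table; `facet_counts` is a hypothetical helper, and the sample data is abbreviated from the documented response.

```python
def facet_counts(response):
    """Map each facet key (as a tuple) to its document count."""
    return {
        tuple(bucket["key"]): bucket["doc_count"]
        for bucket in response.get("aggregations", [])
    }


# Abbreviated from the 200 response sample for facets=zone.
sample = {
    "aggregations": [
        {"key": ["com"], "doc_count": 382078579},
        {"key": ["net"], "doc_count": 21890693},
    ]
}

counts = facet_counts(sample)
print(counts[("com",)])
```

Keys are kept as tuples because `key` is a list, which suggests that a request with several facets may return one value per facet.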

query Parameters

| q required | string (q) Example: q=level:2 The query string used for searching. See Query Syntax for more details. |

| facets required | Array of strings (facets) Example: facets=zone A list of fields to group search results by. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| size | integer (facet_search_size) [ 1 .. 1000 ] Default: 100 Number of search results to return for each facet. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/domains_facet/" \
  -G \
  --data-urlencode 'q=level:2' \
  --data-urlencode 'facets=zone' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "aggregations": [
    {
      "key": [
        "com"
      ],
      "doc_count": 382078579
    },
    {
      "key": [
        "net"
      ],
      "doc_count": 21890693
    },
    {
      "key": [
        "org"
      ],
      "doc_count": 17769023
    },
    {
      "key": [
        "top"
      ],
      "doc_count": 15855678
    },
    {
      "key": [
        "tk"
      ],
      "doc_count": 12662280
    }
  ]
}

Provides information on IP address ownership, network provider details, contact information, and other WHOIS data.

The same data is available in the Netlas web interface via the IP WHOIS Search tool.

IP WHOIS Search

Searches for q in the selected indices and returns up to 20 search results, starting from the start+1 document.

❗️ This method allows retrieving only the first 10,000 search results.

Use it when the expected number of results is relatively low or to craft and refine a query. Use the Download endpoint to fetch results without pagination or quantity limitations.
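The paging rules above (20 results per request, at most the first 10,000 results, `start` limited to 9980) can be expressed as a list of offsets. This is a minimal sketch; `page_offsets`, `PAGE_SIZE`, and `MAX_START` are names chosen for the illustration.

```python
PAGE_SIZE = 20    # results returned per request
MAX_START = 9980  # largest offset accepted by the start parameter


def page_offsets(total_results):
    """Return every valid start offset needed to walk the reachable results."""
    reachable = min(total_results, MAX_START + PAGE_SIZE)
    return list(range(0, reachable, PAGE_SIZE))


print(page_offsets(45))
```

For 45 matching documents this yields three requests. For result sets larger than 10,000 the list stops at offset 9980, which is the point where the Download endpoint becomes the better choice.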

query Parameters

| q required | string (q) Examples:

The query string used for searching. See Query Syntax for more details. |

| start | integer (start) [ 0 .. 9980 ] Default: 0 Example: start=20 Use for pagination. Offset from the first search result to return. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields | Array of strings (fields) Default: "*" Examples: fields=* fields=ip fields=ptr[0],whois.abuse You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type | string (source_type) Default: "include" Enum: "include" "exclude" Specify

|

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_ip/" \
  -G \
  --data-urlencode "q=ip:23.215.0.136" \
  --data-urlencode "fields=net,asn" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "items": [
    {
      "data": {
        "@timestamp": "2025-01-07T05:17:39",
        "raw": "Raw WHOIS data in the form of a string will be here.\n\nThe rest of the document contains the same data in parsed form.",
        "ip": {
          "gte": "23.214.224.0",
          "lte": "23.215.8.255"
        },
        "related_nets": [],
        "net": {
          "country": "US",
          "address": "145 Broadway",
          "city": "Cambridge",
          "created": "2013-07-12",
          "range": "23.192.0.0 - 23.223.255.255",
          "description": "Akamai Technologies, Inc.",
          "handle": "NET-23-192-0-0-1",
          "organization": "Akamai Technologies, Inc. (AKAMAI)",
          "name": "AKAMAI",
          "start_ip": "23.192.0.0",
          "cidr": [
            "23.192.0.0/11"
          ],
          "net_size": 2097151,
          "state": "MA",
          "postal_code": "02142",
          "updated": "2013-08-09",
          "end_ip": "23.223.255.255",
          "contacts": {
            "phones": [
              "+1-617-274-7134",
              "+1-617-444-2535",
              "+1-617-444-0017"
            ]
          }
        },
        "asn": {
          "number": [
            "16625"
          ],
          "registry": "arin",
          "country": "US",
          "name": "AKAMAI-AS",
          "cidr": "23.214.224.0/19",
          "updated": "2013-07-12"
        }
      }
    }
  ]
}

IP WHOIS Count

Counts the number of documents matching the search query q in the selected indices.

- If there are fewer than 1,000 results, the method returns the exact count.

- If there are more than 1,000 results, the count is estimated with an error margin not exceeding 3%.

query Parameters

| q required | string (q) Example: q=net.country:VA The query string used for searching. See Query Syntax for more details. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_ip_count/" \
  -G \
  --data-urlencode "q=net.country:VA" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 403

- 429

- 500

- 504

{
  "count": 123
}

IP WHOIS Download

Retrieves size search results matching the query q in the selected indices.

The Netlas SDK and CLI tool additionally provide a download_all() method and an --all option, respectively, which let you retrieve all available results.

Request Body schema: application/json (required)

| q required | string (q) The query string used for searching. See Query Syntax for more details. |

| size required | integer Number of documents to download. Call the corresponding Count endpoint to get the number of available documents. |

| indices | Array of strings (indices) A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields required | Array of strings (fields) You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type required | string (source_type) Enum: "include" "exclude" Specify

|

| type | string Default: "json" Enum: "json" "csv" Content type to download. |
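When the download is requested as JSON, each element of the returned array wraps the document in a `data` object with nested fields such as `net.name` or `asn.number`. The sketch below flattens such records into CSV on the client side. It is only an illustration: `pick` and `to_csv` are hypothetical helpers, joining list values with `;` is an arbitrary choice, and the output is not claimed to match what the API produces for `type=csv`.

```python
import csv
import io


def pick(document, dotted_path, default=""):
    """Follow a dotted path such as 'net.cidr' through nested dictionaries."""
    node = document
    for part in dotted_path.split("."):
        if not isinstance(node, dict) or part not in node:
            return default
        node = node[part]
    return node


def to_csv(records, columns):
    """Render downloaded records as CSV text with one column per dotted path."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(columns)
    for record in records:
        row = []
        for column in columns:
            value = pick(record.get("data", {}), column)
            if isinstance(value, list):
                # Arbitrary choice: join multi-valued fields with ';'.
                value = ";".join(str(item) for item in value)
            row.append(value)
        writer.writerow(row)
    return buffer.getvalue()


# Abbreviated from the 200 response sample.
records = [
    {"data": {"net": {"name": "URBE-NET", "cidr": ["193.43.128.0/22"]},
              "asn": {"number": ["8978"]}}},
]
print(to_csv(records, ["net.name", "net.cidr", "asn.number"]))
```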

Responses

Request samples

- Payload

- Curl

- Netlas CLI

- Python

{
  "q": "net.country:VA",
  "size": 100,
  "fields": [
    "abuse",
    "asn",
    "net"
  ],
  "source_type": "include"
}

Response samples

- 200

- 400

- 401

- 402

- 403

- 429

- 500

- 504

[
  {
    "data": {
      "net": {
        "country": "VA",
        "address": "Securebit AG\nIndustriestrasse 3\n6345 Neuheim\nSwitzerland",
        "created": "2020-02-02T02:04:48Z",
        "name": "VA-SECUREBIT-20200202",
        "start_ip": "194.50.99.237",
        "range": "194.50.99.237 - 194.50.99.237",
        "description": "Securebit Network Holy See (Vatican City State)",
        "cidr": [
          "194.50.99.237/32"
        ],
        "handle": "SBAC-RIPE",
        "net_size": 0,
        "updated": "2020-02-02T02:04:48Z",
        "end_ip": "194.50.99.237"
      },
      "asn": {
        "number": [
          "34800"
        ],
        "registry": "ripencc",
        "country": "CH",
        "name": "SBAG Securebit Autonomous System",
        "cidr": "194.50.99.0/24",
        "updated": "2019-11-21"
      }
    }
  },
  {
    "data": {
      "net": {
        "country": "VA",
        "address": "Via della Scrofa, 70\n00186\nRoma\nITALY",
        "created": "2002-04-11T09:28:47Z",
        "range": "193.43.128.0 - 193.43.131.255",
        "handle": "MC30359-RIPE",
        "organization": "URBE: Unione Romana Biblioteche Ecclesiastiche",
        "name": "URBE-NET",
        "start_ip": "193.43.128.0",
        "cidr": [
          "193.43.128.0/22"
        ],
        "net_size": 1023,
        "updated": "2016-05-04T08:55:35Z",
        "end_ip": "193.43.131.255",
        "remarks": "Pontifical University of the Holy Cross\nPontificia Universita della Santa Croce\nRome",
        "contacts": {
          "persons": [
            "Massimo Cuccu",
            "Salvatore Toribio"
          ],
          "phones": [
            "+390683396190",
            "+39 06681641",
            "+39 06 681641"
          ]
        }
      },
      "asn": {
        "number": [
          "8978"
        ],
        "registry": "ripencc",
        "country": "IT",
        "name": "ASN-HOLYSEE Holy See Secretariat of State Department of Telecommunications",
        "cidr": "193.43.128.0/22",
        "updated": "1995-04-04"
      }
    }
  },
  {
    "data": {
      "net": {
        "country": "VA",
        "address": "Cortile del Belvedere SNC\n00120\nVatican City\nHOLY SEE (VATICAN CITY STATE)",
        "created": "1970-01-01T00:00:00Z",
        "range": "193.43.102.0 - 193.43.103.255",
        "handle": "MM56992-RIPE",
        "organization": "Biblioteca Apostolica Vaticana",
        "name": "VATICAN-LIBRARY-NET",
        "start_ip": "193.43.102.0",
        "cidr": [
          "193.43.102.0/23"
        ],
        "net_size": 511,
        "updated": "2022-10-04T12:46:18Z",
        "end_ip": "193.43.103.255",
        "remarks": "Multiple Interconnected Servers and LANs",
        "contacts": {
          "persons": [
            "Manlio Miceli"
          ],
          "phones": [
            "+390669879478"
          ]
        }
      },
      "asn": {
        "number": [
          "61160"
        ],
        "registry": "ripencc",
        "country": "VA",
        "name": "ASN-VATLIB",
        "cidr": "193.43.102.0/23",
        "updated": "1995-02-06"
      }
    }
  },
  {
    "data": {
      "net": {
        "country": "VA",
        "address": "5, Via della Conciliazione\n00120\nVatican City\nHOLY SEE (VATICAN CITY STATE)",
        "created": "2024-08-22T07:59:09Z",
        "name": "DPC-Guest-Users",
        "start_ip": "185.152.69.252",
        "range": "185.152.69.252 - 185.152.69.255",
        "cidr": [
          "185.152.69.252/30"
        ],
        "handle": "SC18373-RIPE",
        "net_size": 3,
        "updated": "2024-08-22T07:59:09Z",
        "end_ip": "185.152.69.255",
        "contacts": {
          "persons": [
            "net admin"
          ],
          "phones": [
            "+393346900701"
          ]
        }
      },
      "asn": {
        "number": [
          "202865"
        ],
        "registry": "ripencc",
        "country": "VA",
        "name": "SPC",
        "cidr": "185.152.69.0/24",
        "updated": "2016-05-17"
      }
    }
  },
  {
    "data": {
      "net": {
        "country": "VA",
        "address": "Casina Pio IV, 00120 Citt del Vaticano",
        "created": "2024-08-22T07:58:09Z",
        "name": "PAS-Users",
        "start_ip": "185.152.69.250",
        "range": "185.152.69.250 - 185.152.69.251",
        "cidr": [
          "185.152.69.250/31"
        ],
        "handle": "LR9247-RIPE",
        "net_size": 1,
        "updated": "2024-08-22T07:58:09Z",
        "end_ip": "185.152.69.251",
        "contacts": {
          "persons": [
            "Lorenzo Rumori",
            "Nicola Riccardi"
          ],
          "phones": [
            "+39 0669883451"
          ]
        }
      },
      "asn": {
        "number": [
          "202865"
        ],
        "registry": "ripencc",
        "country": "VA",
        "name": "SPC",
        "cidr": "185.152.69.0/24",
        "updated": "2016-05-17"
      }
    }
  }
]

IP WHOIS Facet Search

Searches for q in the selected indices and groups results by the facets field.

query Parameters

| q required | |

| facets required | Array of strings (facets) Example: facets=net.country A list of fields to group search results by. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| size | integer (facet_search_size) [ 1 .. 1000 ] Default: 100 Number of search results to return for each facet. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_ip_facet/" \
  -G \
  --data-urlencode 'q=*' \
  --data-urlencode 'facets=net.country' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "aggregations": [
    {
      "key": [
        "US"
      ],
      "doc_count": 3811776
    },
    {
      "key": [
        "IT"
      ],
      "doc_count": 762034
    },
    {
      "key": [
        "GB"
      ],
      "doc_count": 732744
    },
    {
      "key": [
        "JP"
      ],
      "doc_count": 614086
    },
    {
      "key": [
        "FR"
      ],
      "doc_count": 574712
    }
  ]
}

Information on domain ownership, registrar details, contact information, registration dates, expiration dates, and other WHOIS data.

The same data is available in the Netlas web interface via the Domain WHOIS Search tool.

Domain WHOIS Search

Searches for q in the selected indices and returns up to 20 search results, starting from the start+1 document.

❗️ This method allows retrieving only the first 10,000 search results.

Use it when the expected number of results is relatively low or to craft and refine a query. Use the Download endpoint to fetch results without pagination or quantity limitations.

query Parameters

| q required | string (q) Examples:

The query string used for searching. See Query Syntax for more details. |

| start | integer (start) [ 0 .. 9980 ] Default: 0 Example: start=20 Use for pagination. Offset from the first search result to return. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields | Array of strings (fields) Default: "*" Examples: fields=* fields=ip fields=ptr[0],whois.abuse You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type | string (source_type) Default: "include" Enum: "include" "exclude" Specify

|

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_domains/" \
  -G \
  --data-urlencode "q=domain:example.com" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "items": [
    {
      "data": {
        "server": "whois.iana.org",
        "last_updated": "2024-05-05T01:45:12.744Z",
        "extension": "com",
        "@timestamp": "2024-05-05T01:45:12.744Z",
        "punycode": "example.com",
        "level": 2,
        "zone": "com",
        "domain": "example.com",
        "name": "example",
        "raw": "Raw WHOIS data in the form of a string will be here.\n\nThe rest of the document contains the same data in parsed form.",
        "created_date": "1992-01-01T00:00:00.000Z",
        "extracted_domain": "example.com"
      }
    }
  ]
}

Domain WHOIS Count

Counts the number of documents matching the search query q in the selected indices.

- If there are fewer than 1,000 results, the method returns the exact count.

- If there are more than 1,000 results, the count is estimated with an error margin not exceeding 3%.

query Parameters

| q required | string (q) Example: q=registrant.organization:"Meta Platforms" The query string used for searching. See Query Syntax for more details. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_domains_count/" \
  -G \
  --data-urlencode 'q=registrant.organization:"Meta Platforms"' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 403

- 429

- 500

- 504

{
  "count": 123
}

Domain WHOIS Download

Retrieves size search results matching the query q in the selected indices.

The Netlas SDK and CLI tool additionally provide a download_all() method and an --all option, respectively, which let you retrieve all available results.

Request Body schema: application/json (required)

| q required | string (q) The query string used for searching. See Query Syntax for more details. |

| size required | integer Number of documents to download. Call the corresponding Count endpoint to get the number of available documents. |

| indices | Array of strings (indices) A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields required | Array of strings (fields) You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type required | string (source_type) Enum: "include" "exclude" Specify

|

| type | string Default: "json" Enum: "json" "csv" Content type to download. |
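Queries that contain a quoted phrase need their double quotes escaped once they are placed inside a JSON body, as the payload sample for this endpoint shows. Rather than escaping by hand, the body can be produced with a JSON serializer. A minimal sketch using only the Python standard library:

```python
import json

payload = {
    # Single quotes on the Python side keep the inner double quotes literal.
    "q": 'registrant.organization:"Meta Platforms"',
    "size": 100,
    "fields": ["domain", "registrant", "registrar", "created_date", "updated_date"],
    "source_type": "include",
}

# json.dumps escapes the inner quotes as \" in the serialized body.
body = json.dumps(payload)
print(body)
```

The printed body contains `\"Meta Platforms\"`, which decodes back to the original query string on the server side.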

Responses

Request samples

- Payload

- Curl

- Netlas CLI

- Python

{
  "q": "registrant.organization:\"Meta Platforms\"",
  "size": 100,
  "fields": [
    "domain",
    "registrant",
    "registrar",
    "created_date",
    "updated_date"
  ],
  "source_type": "include"
}

Response samples

- 200

- 400

- 401

- 402

- 403

- 429

- 500

- 504

[
  {
    "data": {
      "registrar": {
        "phone": "+1.6503087004",
        "name": "RegistrarSEC, LLC",
        "id": "2475",
        "email": "[email protected]"
      },
      "stats": {
        "retries": 0,
        "quota_retries": 1,
        "parser": "no_error",
        "was_queued": true,
        "error": "no_error",
        "total_time": 3212224099
      },
      "domain": "facebookinstagram.club",
      "created_date": "2021-01-01T04:31:04.000Z",
      "registrant": {
        "country": "US",
        "province": "CA",
        "phone": "+1.6505434800",
        "city": "Menlo Park",
        "street": "1601 Willow Rd",
        "organization": "Meta Platforms, Inc.",
        "id": "CFD32E1A5A4214BE1B361B507C8F159E1-NSR",
        "postal_code": "94025"
      },
      "updated_date": "2024-10-08T09:00:11.000Z"
    }
  },
  {
    "data": {
      "registrar": {
        "phone": "+1.6503087004",
        "name": "RegistrarSEC, LLC",
        "id": "2475",
        "email": "[email protected]"
      },
      "stats": {
        "retries": 3,
        "quota_retries": 2,
        "parser": "no_error",
        "was_queued": false,
        "error": "no_error",
        "total_time": 61186643216
      },
      "domain": "playit.games",
      "created_date": "2018-12-24T18:49:39.000Z",
      "registrant": {
        "country": "US",
        "province": "CA",
        "phone": "+1.6505434800",
        "city": "Menlo Park",
        "street": "1601 Willow Rd",
        "organization": "Meta Platforms, Inc.",
        "id": "d2d0ffd8525b47ac980ed1b6cd94b53a-DONUTS",
        "postal_code": "94025"
      },
      "updated_date": "2024-10-01T09:00:17.000Z"
    }
  },
  {
    "data": {
      "registrar": {
        "phone": "+1.6503087004",
        "name": "RegistrarSEC, LLC",
        "id": "2475",
        "email": "[email protected]"
      },
      "stats": {
        "retries": 0,
        "quota_retries": 0,
        "parser": "no_error",
        "was_queued": false,
        "error": "no_error",
        "total_time": 1640247726
      },
      "domain": "armature.biz",
      "created_date": "2021-11-16T15:58:58.000Z",
      "registrant": {
        "country": "US",
        "province": "CA",
        "phone": "+1.6505434800",
        "city": "Menlo Park",
        "street": "1601 Willow Rd",
        "organization": "Meta Platforms, Inc.",
        "id": "C4AA0F510959A485B9F6B98358B3A51B2-NSR",
        "postal_code": "94025"
      },
      "updated_date": "2022-10-02T18:52:24.000Z"
    }
  },
  {
    "data": {
      "registrar": {
        "phone": "+1.6503087004",
        "name": "RegistrarSEC, LLC",
        "id": "2475",
        "email": "[email protected]"
      },
      "stats": {
        "retries": 3,
        "quota_retries": 2,
        "parser": "no_error",
        "was_queued": false,
        "error": "no_error",
        "total_time": 61653355239
      },
      "domain": "metaquest.engineering",
      "created_date": "2022-01-27T19:41:55.000Z",
      "registrant": {
        "country": "US",
        "province": "CA",
        "phone": "+1.6505434800",
        "city": "Menlo Park",
        "street": "1601 Willow Rd",
        "organization": "Meta Platforms, Inc.",
        "id": "71771827efe4428d9173f02838801b85-DONUTS",
        "postal_code": "94025"
      },
      "updated_date": "2024-11-04T09:01:17.000Z"
    }
  },
  {
    "data": {
      "registrar": {
        "phone": "+1.6503087004",
        "name": "RegistrarSEC, LLC",
        "id": "2475",
        "email": "[email protected]"
      },
      "stats": {
        "retries": 1,
        "quota_retries": 0,
        "parser": "no_error",
        "was_queued": false,
        "error": "no_error",
        "total_time": 13076483862
      },
      "domain": "metabankbilling.mobi",
      "created_date": "2017-03-01T18:16:53.000Z",
      "registrant": {
        "country": "US",
        "province": "CA",
        "phone": "+1.6505434800",
        "city": "Menlo Park",
        "street": "1601 Willow Rd",
        "organization": "Meta Platforms, Inc.",
        "id": "dc3017e992ae43f4a17b2e75e0727f5c-DONUTS",
        "postal_code": "94025"
      },
      "updated_date": "2024-12-07T09:00:32.000Z"
    }
  }
]

Domain WHOIS Facet Search

Searches for q in the selected indices and groups results by the facets field.

query Parameters

| q required | string (q) Example: q=level:2 The query string used for searching. See Query Syntax for more details. |

| facets required | Array of strings (facets) Example: facets=zone A list of fields to group search results by. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| size | integer (facet_search_size) [ 1 .. 1000 ] Default: 100 Number of search results to return for each facet. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/whois_domains_facet/" \
  -G \
  --data-urlencode 'q=level:2' \
  --data-urlencode 'facets=zone' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "aggregations": [
    {
      "key": [
        "com"
      ],
      "doc_count": 377917162
    },
    {
      "key": [
        "net"
      ],
      "doc_count": 21765983
    },
    {
      "key": [
        "org"
      ],
      "doc_count": 19341321
    },
    {
      "key": [
        "xyz"
      ],
      "doc_count": 13838761
    },
    {
      "key": [
        "de"
      ],
      "doc_count": 12480920
    }
  ]
}

A collection of X.509 certificates gathered from various sources.

The same data is available in the Netlas web interface via the Certificates Search tool.

Certificates Search

Searches for q in the selected indices and returns up to 20 search results, starting from the start+1 document.

❗️ This method allows retrieving only the first 10,000 search results.

Use it when the expected number of results is relatively low or to craft and refine a query. Use the Download endpoint to fetch results without pagination or quantity limitations.
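Certificate documents returned by this endpoint include a `validity` object with `start`, `end`, and `length` fields. In the response sample, `length` matches the number of seconds between `start` and `end`. The sketch below recomputes that value. It assumes timestamps always use the `YYYY-MM-DDTHH:MM:SSZ` form seen in the sample, and `validity_seconds` is a hypothetical helper.

```python
from datetime import datetime, timezone

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"  # format observed in the response sample


def validity_seconds(start, end):
    """Return the number of seconds between two UTC validity timestamps."""
    begin = datetime.strptime(start, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)
    finish = datetime.strptime(end, TIMESTAMP_FORMAT).replace(tzinfo=timezone.utc)
    return int((finish - begin).total_seconds())


# Values taken from the leaf certificate in the response sample.
print(validity_seconds("2025-01-15T00:00:00Z", "2026-01-15T23:59:59Z"))
```

A check like this can be used to flag certificates with unusually long validity periods after a search or download.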

query Parameters

| q required | string (q) Example: q=certificate.subject_dn:"example.com" The query string used for searching. See Query Syntax for more details. |

| start | integer (start) [ 0 .. 9980 ] Default: 0 Example: start=20 Use for pagination. Offset from the first search result to return. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= ℹ️ Netlas stores all collected certificates in a single default index. |

| fields | Array of strings (fields) Default: "*" Examples: fields=* fields=ip fields=ptr[0],whois.abuse You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type | string (source_type) Default: "include" Enum: "include" "exclude" Specify

|

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/certs/" \
  -G \
  --data-urlencode "q=certificate.subject_dn:example.com" \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 402

- 403

- 429

- 500

- 504

{
  "items": [
    {
      "data": {
        "issuer_dn": "C=US, O=DigiCert Inc, CN=DigiCert Global G3 TLS ECC SHA384 2020 CA1",
        "fingerprint_md5": "c33979ff8bc19a94820d6804b3681881",
        "chain": [
          {
            "issuer_dn": "C=US, O=DigiCert Inc, OU=www.digicert.com, CN=DigiCert Global Root G3",
            "fingerprint_md5": "bf44da68b5abcb0a48c8471806ff9706",
            "redacted": false,
            "signature": {
              "valid": false,
              "signature_algorithm": {
                "name": "ECDSA-SHA384",
                "oid": "1.2.840.10045.4.3.3"
              },
              "value": "MGUCMH4mWG7uiOwM3RVB7nq4mZlw0WJlT6AgnkexW8GyZzEdzHJ6ryJyQEJuZYT+h0sPGQIxAOa/1q40h1s/Z8cdqG/VEni15ocxRKldxrh4zM/v1DJYEf86hQY8HYRv0/X52jMcpA==",
              "self_signed": false
            },
            "subject": {
              "country": [
                "US"
              ],
              "organization": [
                "DigiCert Inc"
              ],
              "common_name": [
                "DigiCert Global G3 TLS ECC SHA384 2020 CA1"
              ]
            },
            "serial_number": "14626237344301700912191253757342652550",
            "version": 3,
            "issuer": {
              "country": [
                "US"
              ],
              "organization": [
                "DigiCert Inc"
              ],
              "common_name": [
                "DigiCert Global Root G3"
              ],
              "organizational_unit": [
                "www.digicert.com"
              ]
            },
            "fingerprint_sha256": "0587d6bd2819587ab90fb596480a5793bd9f7506a3eace73f5eab366017fe259",
            "tbs_noct_fingerprint": "b01920744bbb76c9ab053e01e07b7e050e473d20f79f7bea435fafe43c9d242f",
            "extensions": {
              "subject_key_id": "8a23eb9e6bd7f9375df96d2139769aa167de10a8",
              "certificate_policies": [
                {
                  "id": "2.16.840.1.114412.2.1"
                },
                {
                  "id": "2.23.140.1.1"
                },
                {
                  "id": "2.23.140.1.2.1"
                },
                {
                  "id": "2.23.140.1.2.2"
                },
                {
                  "id": "2.23.140.1.2.3"
                }
              ],
              "authority_key_id": "b3db48a4f9a1c5d8ae3641cc1163696229bc4bc6",
              "key_usage": {
                "digital_signature": true,
                "certificate_sign": true,
                "crl_sign": true,
                "value": 97
              },
              "authority_info_access": {},
              "basic_constraints": {
                "max_path_len": 0,
                "is_ca": true
              },
              "extended_key_usage": {
                "client_auth": true,
                "server_auth": true
              }
            },
            "tbs_fingerprint": "b01920744bbb76c9ab053e01e07b7e050e473d20f79f7bea435fafe43c9d242f",
            "subject_dn": "C=US, O=DigiCert Inc, CN=DigiCert Global G3 TLS ECC SHA384 2020 CA1",
            "fingerprint_sha1": "9577f91fe86c27d9912129730e8166373fc2eeb8",
            "signature_algorithm": {
              "name": "ECDSA-SHA384",
              "oid": "1.2.840.10045.4.3.3"
            },
            "spki_subject_fingerprint": "a7cb399df47e982018276c3397790bdef648e6d87b2f7b90d551f719ac6098c4",
            "validity": {
              "length": 315532799,
              "start": "2021-04-14T00:00:00Z",
              "end": "2031-04-13T23:59:59Z"
            },
            "validation_level": "EV"
          }
        ],
        "redacted": false,
        "signature": {
          "valid": false,
          "signature_algorithm": {
            "name": "ECDSA-SHA384",
            "oid": "1.2.840.10045.4.3.3"
          },
          "value": "MGUCMQD5poJGU9tv5Vj67hq8/Jobt+9QMmo3wrCWtcPhem1PtAv4PTf4ED8VQSjd0PWLPfsCMGRjeOGy4sBbulawNu1f9DDGnqQ2wriOHX9GO9X/brSzFDAz8Yzu3T5PS4/Yv5jXZQ==",
          "self_signed": false
        },
        "subject": {
          "country": [
            "US"
          ],
          "province": [
            "California"
          ],
          "organization": [
            "Internet Corporation for Assigned Names and Numbers"
          ],
          "locality": [
            "Los Angeles"
          ],
          "common_name": [
            "*.example.com"
          ]
        },
        "serial_number": "14416812407440461216471976375640436634",
        "version": 3,
        "issuer": {
          "country": [
            "US"
          ],
          "organization": [
            "DigiCert Inc"
          ],
          "common_name": [
            "DigiCert Global G3 TLS ECC SHA384 2020 CA1"
          ]
        },
        "fingerprint_sha256": "455943cf819425761d1f950263ebf54755d8d684c25535943976f488bc79d23b",
        "tbs_noct_fingerprint": "bc8ace8a15de0d8136c6f642e1d1e367a3dd38d5534e3fc4f52c5ee6b86cb25d",
        "extensions": {
          "crl_distribution_points": [],
          "subject_key_id": "f0c16a320decdac7ea8fcd0d6d191259d1be72ed",
          "authority_key_id": "8a23eb9e6bd7f9375df96d2139769aa167de10a8",
          "key_usage": {
            "key_agreement": true,
            "digital_signature": true,
            "value": 17
          },
          "subject_alt_name": {
            "dns_names": [
              "*.example.com",
              "example.com"
            ]
          },
          "signed_certificate_timestamps": [
            {
              "log_id": "DleUvPOuqT4zGyyZB7P3kN+bwj1xMiXdIaklrGHFTiE=",
              "signature": "BAMARTBDAh8kFw9aTHzSKTu4thbo4a81i8ng2Y5HZFdz26+IU8fpAiBS265R6cchPlQ1Yl98EFGrfW1QaLtkNNKuszR/jPVVrg==",
              "version": 0,
              "timestamp": 1736902885
            },
            {
              "log_id": "ZBHEbKQS7KeJHKICLgC8q08oB9QeNSer6v7VA8l9zfA=",
              "signature": "BAMARjBEAiBwrujYB4VdUL4n/xuwR6u3IjBh/I3XIf8cuC862JXrFwIgcjBTLw4RoOLGJtTLKwxlXnXMKROHjdEbmXBRplscCXI=",
              "version": 0,
              "timestamp": 1736902885
            },
            {
              "log_id": "SZybad4dfOz8Nt7Nh2SmuFuvCoeAGdFVUvvp6ynd+MM=",
              "signature": "BAMARzBFAiBoWHrvIRDaXCCbdfXqfaJaMRAUgjZvZ+k420FWJtlVbAIhAPmmyqNcNiwgRvWHKHRLxsE3c7i7awD3OKwoiViNmDzC",
              "version": 0,
              "timestamp": 1736902885
            }
          ],
          "authority_info_access": {},
          "basic_constraints": {
            "is_ca": false
          },
          "extended_key_usage": {
            "client_auth": true,
            "server_auth": true
          }
        },
        "tbs_fingerprint": "ad7d5aa4244532a22369dfa25a851e180cb34a39d90efba98022f0f9832e0bd9",
        "names": [
          "*.example.com",
          "example.com"
        ],
        "subject_dn": "C=US, ST=California, L=Los Angeles, O=Internet Corporation for Assigned Names and Numbers, CN=*.example.com",
        "fingerprint_sha1": "310db7af4b2bc9040c8344701aca08d0c69381e3",
        "signature_algorithm": {
          "name": "ECDSA-SHA384",
          "oid": "1.2.840.10045.4.3.3"
        },
        "spki_subject_fingerprint": "9d77c9a308deb7b5d91be7d8d5e10587bd9381a70913cfad1883b9bdcd825d43",
        "validity": {
          "length": 31622399,
          "start": "2025-01-15T00:00:00Z",
          "end": "2026-01-15T23:59:59Z"
        },
        "validation_level": "OV"
      }
    }
  ]
}

Certificates Count

Counts the number of documents matching the search query q in the selected indices.

- If there are fewer than 1,000 results, the method returns the exact count.

- If there are more than 1,000 results, the count is estimated with an error margin not exceeding 3%.

query Parameters

| q required | string (q) Example: q=certificate.subject_dn:"example.com" The query string used for searching. See Query Syntax for more details. |

| indices | Array of strings (indices) Default: "" Examples: indices=2025-09-24,2025-09-02 indices= ℹ️ Netlas stores all collected certificates in a single default index. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -s -X GET \
  "https://app.netlas.io/api/certs_count/" \
  -G \
  --data-urlencode 'q=certificate.subject_dn:example.com' \
  -H "Authorization: Bearer $NETLAS_API_KEY"

Response samples

- 200

- 400

- 403

- 429

- 500

- 504

{
  "count": 123
}

Certificates Download

Retrieves size search results matching the query q in the selected indices.

The Netlas SDK and CLI tool additionally provide a download_all() method and an --all option, respectively, which let you retrieve all available results.

Request Body schema: application/json (required)

| q required | string (q) The query string used for searching. See Query Syntax for more details. |

| size required | integer Number of documents to download. Call the corresponding Count endpoint to get the number of available documents. |

| indices | Array of strings (indices) A list of index labels to search in. If not provided, the search is performed in the default (most relevant) index. Call the indices endpoint to get the list of available indices. |

| fields required | Array of strings (fields) You can control the amount of output data by specifying which fields to include or exclude in the response.

Use the |

| source_type required | string (source_type) Enum: "include" "exclude" Specify

|

| type | string Default: "json" Enum: "json" "csv" Content type to download. |
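The request body described above can be assembled and checked before it is sent. The following is a minimal sketch, assuming only the parameters listed in the table; build_download_payload is a hypothetical helper, and the download endpoint URL is deliberately left out because it is not shown in this section.

```python
import json


def build_download_payload(q, size, fields, source_type,
                           indices=None, content_type="json"):
    """Return a dict matching the request body schema described above."""
    if not isinstance(size, int) or size < 1:
        raise ValueError("size must be a positive integer")
    if source_type not in ("include", "exclude"):
        raise ValueError('source_type must be "include" or "exclude"')
    if content_type not in ("json", "csv"):
        raise ValueError('type must be "json" or "csv"')

    payload = {
        "q": q,
        "size": size,
        "fields": list(fields),
        "source_type": source_type,
    }
    if indices:
        payload["indices"] = list(indices)
    if content_type != "json":
        # "json" is the documented default, so it is only sent when changed.
        payload["type"] = content_type
    return payload


if __name__ == "__main__":
    body = build_download_payload(
        q='certificate.subject_dn:"example.com"',
        size=100,
        fields=["certificate.src", "certificate.fingerprint_sha1"],
        source_type="include",
    )
    print(json.dumps(body, indent=2))
```

Validating the enum values locally avoids a round trip that would otherwise likely end in a 400 response.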

Responses

Request samples

- Payload

- Curl

- Netlas CLI

- Python

{
  "q": "certificate.subject_dn:\"example.com\"",
  "size": 100,
  "fields": [
    "certificate.src",
    "certificate.fingerprint_sha1"
  ],
  "source_type": "include"
}

Response samples

- 200

- 400

- 401

- 402

- 403

- 429

- 500

- 504

[
  {
    "data": {
      "certificate": {
        "fingerprint_sha1": "675073c9b39e172a36468b646c4838e7d91e11d9"
      }
    }
  },
  {
    "data": {
      "certificate": {
        "fingerprint_sha1": "ff4d9c8b266e80992a4e7f0120a9633fe6e591bf"
      }
    }
  },
  {
    "data": {
      "certificate": {
        "fingerprint_sha1": "5362de8d24304c95849ae914c90cf9e033588881"
      }
    }
  },
  {
    "data": {
      "certificate": {
        "fingerprint_sha1": "c3d830026cb6a46f31038fb87b8eed1bb11d1724"
      }
    }
  },
  {
    "data": {
      "certificate": {
        "src": "imaps://111.230.60.119:993",
        "fingerprint_sha1": "21e4591ced037590466857d9853a379edc712bef"
      }
    }
  }
]

Netlas organizes its data into indices. Each search tool has a dedicated set of indices with data collected on specific dates.

The Responses search tool also provides access to private indices:

- Public indices: regular Netlas indices available to all Netlas users.

- Private indices: indices that you created with the Private Scanner or that your teammates shared with you.
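Once the list of indices has been fetched, it can be filtered locally. The sketch below is an illustration that assumes each index object carries the type, is_default, label, and availability fields shown in the response sample for this endpoint; both helper names are hypothetical.

```python
from collections import defaultdict


def default_index_label(indices, index_type):
    """Return the label of the default index for a search tool, or None."""
    for index in indices:
        if index.get("type") == index_type and index.get("is_default"):
            return index.get("label")
    return None


def labels_by_availability(indices):
    """Group index labels by the value of their "availability" field."""
    groups = defaultdict(list)
    for index in indices:
        groups[index.get("availability")].append(index.get("label"))
    return dict(groups)


if __name__ == "__main__":
    # Trimmed-down objects modeled on the response sample for this endpoint.
    sample = [
        {"label": "2025-09-24", "type": "responses",
         "availability": "public", "is_default": True},
        {"label": "2025-09-02", "type": "responses",
         "availability": "public", "is_default": False},
    ]
    print(default_index_label(sample, "responses"))
    print(labels_by_availability(sample))
```

The grouping function does not hard-code availability values, so it keeps working whatever label the API uses for private indices.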

Request samples

- Curl

- Netlas CLI

- Python

curl -X GET \
  "https://app.netlas.io/api/indices/" \
  -H "content-type: application/json"

Response samples

- 200

- 500

[
  {
    "id": 1001,
    "name": "string",
    "label": "2025-09-24",
    "scan_started_at": "2019-08-24T14:15:22Z",
    "scan_ended_at": "2019-08-24T14:15:22Z",
    "type": "responses",
    "availability": "public",
    "is_default": true,
    "speed": "slow",
    "state": "indexing",
    "count": 0
  }
]

Netlas stores collected data as JSON documents, organized into several data collections, such as Responses and Domains. Each data collection has its own mapping, which defines its document structure.

This group of methods provides access to the mapping of each data collection.

Get Mapping

Returns the field mapping for the default index in the {collection}.

Use this mapping to understand which fields are available and how they are typed, so you can craft precise search queries using Lucene Query Syntax.

Each field or subfield will include either a field_type or children property:

- A field_type indicates that the field is a leaf node in the mapping tree.

- A children property indicates a parent field that contains nested fields.

Example:

{
  "leaf": {
    "field_type": "type"
  },
  "parent": {
    "children": [
      {
        "leaf": {
          "field_type": "type"
        }
      }
    ]
  }
}
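A mapping in this shape can be flattened into a list of searchable field names with a short recursive walk. The sketch below is illustrative: leaf_fields is a hypothetical helper, and joining parent and child names with a dot is an assumption based on the dotted field names used in query examples such as certificate.subject_dn.

```python
def leaf_fields(mapping, prefix=""):
    """Return {dotted_field_path: field_type} for every leaf in a mapping."""
    paths = {}
    for name, node in mapping.items():
        path = prefix + name
        if "children" in node:
            # Parent field: each child is a one-key dict holding a nested field.
            for child in node["children"]:
                paths.update(leaf_fields(child, prefix=path + "."))
        elif "field_type" in node:
            # Leaf field: record its type.
            paths[path] = node["field_type"]
    return paths


if __name__ == "__main__":
    example = {
        "leaf": {"field_type": "type"},
        "parent": {
            "children": [
                {"leaf": {"field_type": "type"}},
            ],
        },
    }
    for field, field_type in leaf_fields(example).items():
        print(field, field_type)
```

Nodes that have neither property are skipped rather than treated as errors, since the mapping may carry extra keys such as category alongside field_type or children.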

❗️Note: This endpoint returns the search mapping, which may slightly differ from the structure of actual search results. Refer to the response examples for each data collection to see how fields are represented in real-world data.

path Parameters

| collection required | string (dataCollectionType) Enum: "responses" "domains" "whois_ip" "whois_domains" "certs" The collection for which mapping should be retrieved. |

Responses

Request samples

- Curl

- Netlas CLI

- Python

curl -X GET \
  "https://app.netlas.io/api/mapping/responses/" \
  -H "content-type: application/json"

Response samples

- 200

- 500

{- "ip": {

- "category": "addressing",

- "field_type": "ip"

}, - "domain": {

- "category": "addressing",

- "field_type": "wildcard"

}, - "host": {

- "category": "addressing",

- "field_type": "keyword"

}, - "host_type": {

- "category": "addressing",

- "field_type": "text"

}, - "path": {

- "category": "addressing",

- "field_type": "keyword"

}, - "port": {

- "category": "addressing",

- "field_type": "integer"

}, - "prot4": {

- "category": "addressing",

- "field_type": "keyword"

}, - "prot7": {

- "category": "addressing",

- "field_type": "keyword"

}, - "protocol": {

- "category": "addressing",

- "field_type": "keyword"

}, - "ptr": {

- "category": "addressing",

- "field_type": "text"

}, - "referer": {

- "category": "addressing",

- "field_type": "text"

}, - "target": {

- "category": "addressing",

- "children": [

- {

- "domain": {

- "field_type": "wildcard"

}

}, - {

- "ip": {

- "field_type": "ip"

}

}, - {

- "type": {

- "field_type": "text"

}

}

]

}, - "uri": {

- "category": "addressing",